Section: New Results

Research Methods

Participants: Michel Beaudouin-Lafon, Anastasia Bezerianos, Jérémie Garcia, Stéphane Huot, Ilaria Liccardi, Wendy Mackay [correspondent], Justin Mathew.

Empirical research is a fundamental part of InSitu's research activities. It includes observation of users in field and laboratory settings to discover the problems they face, controlled laboratory experiments to evaluate the effectiveness of the technologies we develop, longitudinal field studies to determine how our technologies work in the real world, and participatory design to explore design possibilities with users throughout the design process.

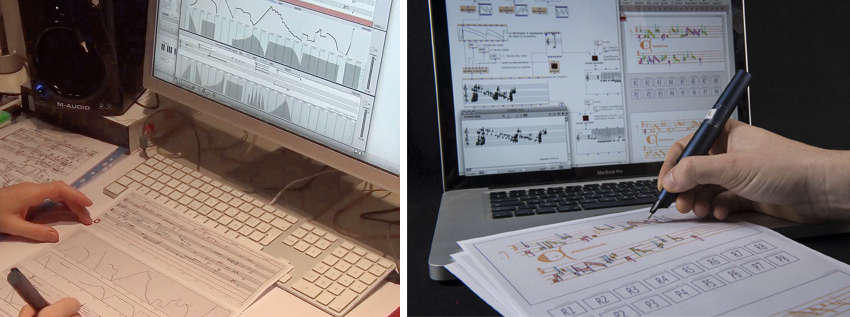

Computer-aided Composition – We designed Polyphony [20], a novel interface for systematically studying all phases of computer-aided composition, and then used it to observe expert creative behavior. Polyphony is a unified user interface that integrates interactive paper and electronic user interfaces for composing music. We asked 12 composers to use it (figure 7, left) to compose an electronic accompaniment to a 20-second instrumental composition by Anton Webern. The resulting dozen comparable snapshots of the composition process reveal how composers both adapt and appropriate tools in their own way. In collaboration with IRCAM, we also conducted a longitudinal study in which we worked closely with composer Philippe Leroux [19] on the creation of his piece Quid sit musicus. The composer used our interactive-paper interfaces, along with an OpenMusic library, to generate compositional material for this work (figure 7, right).


Multitouch Gestures – We created a design space of simple multitouch gestures that designers of user interfaces can systematically explore to propose more gestures to users [27]. We further considered a set of 32 gestures for tablet-sized devices, developing an incremental recognition engine that works with current hardware technology and empirically testing the usability of those gestures. In our experiment, individual gestures were recognized with an average accuracy of about 90%, and users successfully achieved some of the transitions between gestures without explicit delimiters. The goal of this work is to help designers make the best use of the rich multitouch input channel for activating discrete and continuous controls, and to enable fluid transitions between controls, e.g., when selecting text over multiple views, manipulating different degrees of freedom of a graphical object, or invoking a command and setting its parameter values in a row.

Spatial Audio – We investigated the issues raised by spatialization techniques for object-based audio production and introduced the Spatial Audio Design Spaces framework (SpADS) [25], which describes the spatial manipulation of object-based audio. These design spaces are based on interviews with professional sound engineers and on a morphological analysis of 3D audio objects that clarifies the relationships between the recording and rendering techniques defined for 3D speaker configurations. This framework will allow us to analyze and design novel, advanced object-based controllers.

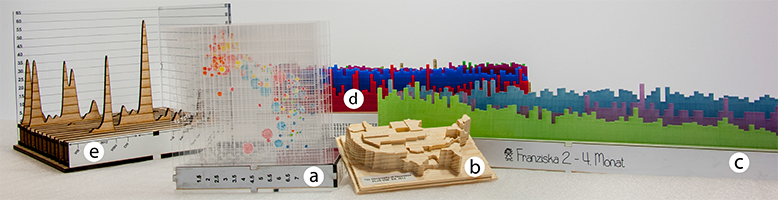

Physical Visualizations – We studied the design process of physical visualizations. An increasing variety of such visualizations are being built, for purposes ranging from art and entertainment to business analytics and scientific research. However, crafting them remains a laborious process that demands expertise in both data visualization and digital fabrication. We analyzed the limitations of current workflows through three real case studies and created MakerVis, the first tool that integrates the entire workflow, from data filtering to physical fabrication (figure 8). Design sessions with three end users showed that tools such as MakerVis can dramatically lower the barriers to producing physical visualizations. Observations and interviews also revealed important directions for future research. These include rich support for customization and extensive software support for materials that accounts for their unique physical properties as well as their limited supply.